==Author: cybsky==

[toc]

Unsupported major.minor version 52.0

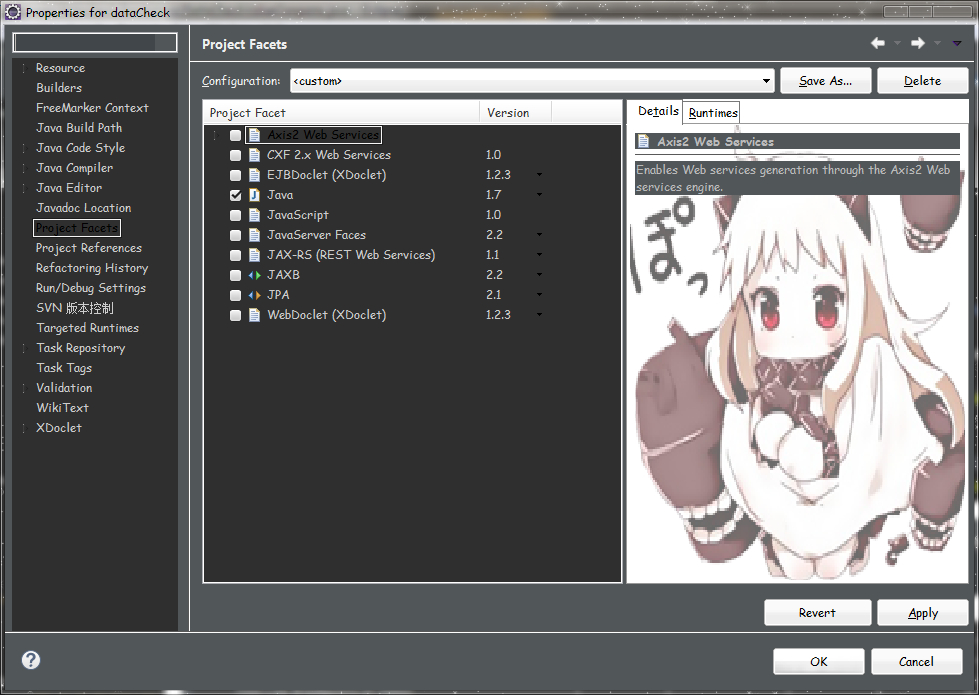

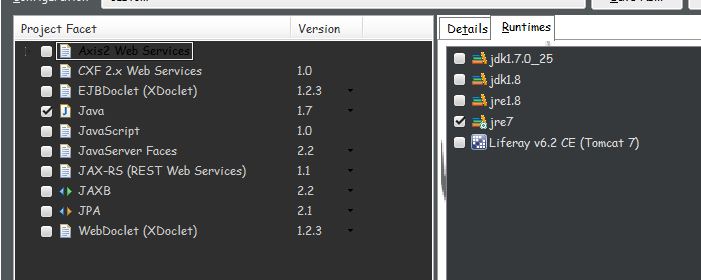

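The JVM reports this error when JDK versions do not match: the classes were compiled for Java 8 (class file version 52.0) but are being run on an older JRE. To fix it, right-click the project and open Properties.

At the time, jre7 was greyed out and could not be selected. Clicking "Show all runtimes" and choosing the jre8 version solves the problem. Alternatively, reopen the dialog a little later and the original jre7 entry reappears.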
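To see which Java release a compiled class actually requires, the version can be read straight from the class-file header. The following is a minimal sketch; `ClassVersionCheck` and its method names are made up for illustration, and the `major - 44` mapping only holds for Java 5 and later.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;

public class ClassVersionCheck {

    /** Reads the major version from a class-file header: magic (4 bytes), minor (2), major (2). */
    public static int majorVersion(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        if (data.readInt() != 0xCAFEBABE) {
            throw new IOException("not a Java class file");
        }
        data.readUnsignedShort();          // minor version, ignored here
        return data.readUnsignedShort();   // major version, e.g. 52
    }

    /** Maps a major version to a Java release; valid for Java 5 (major 49) and later. */
    public static int javaRelease(int major) {
        return major - 44;
    }

    public static void main(String[] args) throws IOException {
        // Highest class-file version the running JVM accepts, e.g. "51.0" on Java 7.
        System.out.println("JVM supports up to: " + System.getProperty("java.class.version"));
        if (args.length > 0) {
            try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
                int major = majorVersion(in);
                System.out.println(args[0] + " needs Java " + javaRelease(major)
                        + " (major " + major + ")");
            }
        }
    }
}
```

Run it with the path to a `.class` file as the only argument. If the class needs a higher version than the JVM supports, the "Unsupported major.minor version" error is expected.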

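Null pointer

Today I hit a NullPointerException: adding (0+"") to a List<String> threw an error. On inspection, the list had been declared but never instantiated. Once the list is instantiated, adding 0+"" does not fail; it adds the string "0" to the list.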
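A small reproduction of the situation described above, as a sketch: the class and field names are hypothetical and not taken from the original project.

```java
import java.util.ArrayList;
import java.util.List;

public class NullListDemo {
    // Declared but never instantiated, so the reference is null.
    static List<String> uninitialized;

    /** Adds 0 + "" to a properly instantiated list and returns it. */
    public static List<String> addToInitializedList() {
        List<String> values = new ArrayList<>();
        values.add(0 + "");   // int 0 concatenated with "" gives the String "0"
        return values;
    }

    /** Returns true if adding to the never-instantiated list throws NullPointerException. */
    public static boolean addToNullListFails() {
        try {
            uninitialized.add(0 + "");
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(addToInitializedList()); // prints [0]
        System.out.println(addToNullListFails());   // prints true
    }
}
```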

FileNotFoundException when connecting to Hive on the server

log4j:ERROR setFile(null,true) call failed.

java.io.FileNotFoundException: /home/yarn/hadoop-2.6.0/logs/flume-hadoop/flume-hadoop.log (Permission denied)

at java.io.FileOutputStream.open(Native Method)

at java.io.FileOutputStream.<init>(FileOutputStream.java:221)

at java.io.FileOutputStream.<init>(FileOutputStream.java:142)

at org.apache.log4j.FileAppender.setFile(FileAppender.java:294)

at org.apache.log4j.FileAppender.activateOptions(FileAppender.java:165)

at org.apache.log4j.DailyRollingFileAppender.activateOptions(DailyRollingFileAppender.java:223)

at org.apache.log4j.config.PropertySetter.activate(PropertySetter.java:307)

at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:172)

at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:104)

at org.apache.log4j.PropertyConfigurator.parseAppender(PropertyConfigurator.java:842)

at org.apache.log4j.PropertyConfigurator.parseCategory(PropertyConfigurator.java:768)

at org.apache.log4j.PropertyConfigurator.configureRootCategory(PropertyConfigurator.java:648)

at org.apache.log4j.PropertyConfigurator.doConfigure(PropertyConfigurator.java:514)

at org.apache.log4j.PropertyConfigurator.doConfigure(PropertyConfigurator.java:580)

at org.apache.log4j.helpers.OptionConverter.selectAndConfigure(OptionConverter.java:526)

at org.apache.log4j.LogManager.<clinit>(LogManager.java:127)

at org.slf4j.impl.Log4jLoggerFactory.getLogger(Log4jLoggerFactory.java:66)

at org.slf4j.LoggerFactory.getLogger(LoggerFactory.java:270)

at org.apache.commons.logging.impl.SLF4JLogFactory.getInstance(SLF4JLogFactory.java:155)

at org.apache.commons.logging.impl.SLF4JLogFactory.getInstance(SLF4JLogFactory.java:132)

at org.apache.commons.logging.LogFactory.getLog(LogFactory.java:657)

at org.apache.hadoop.util.VersionInfo.<clinit>(VersionInfo.java:37)

log4j:ERROR Either File or DatePattern options are not set for appender [File].

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/yarn/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

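This happens because the user running the program does not have permission to write the log file.

A class-not-found error (ClassNotFoundException or NoClassDefFoundError) usually points to a jar dependency problem.

Connecting to Phoenix from Java requires configuring the hosts file

Path: C:\Windows\System32\drivers\etc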
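One way to confirm the permission problem before log4j starts is to check whether the current user can write to the log location. This is only a sketch: `LogPathCheck` is a hypothetical helper, and the path in `main` is the one from the error message above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class LogPathCheck {

    /** True if the file is writable, or if it does not exist yet but its directory is writable. */
    public static boolean canWriteLog(Path logFile) {
        if (Files.exists(logFile)) {
            return Files.isWritable(logFile);
        }
        Path parent = logFile.toAbsolutePath().getParent();
        return parent != null && Files.isDirectory(parent) && Files.isWritable(parent);
    }

    public static void main(String[] args) {
        Path log = Paths.get("/home/yarn/hadoop-2.6.0/logs/flume-hadoop/flume-hadoop.log");
        System.out.println("user " + System.getProperty("user.name")
                + " can write " + log + ": " + canWriteLog(log));
    }
}
```

If this prints `false`, either run the program as a user with write access or point the log4j file appender at a writable location.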

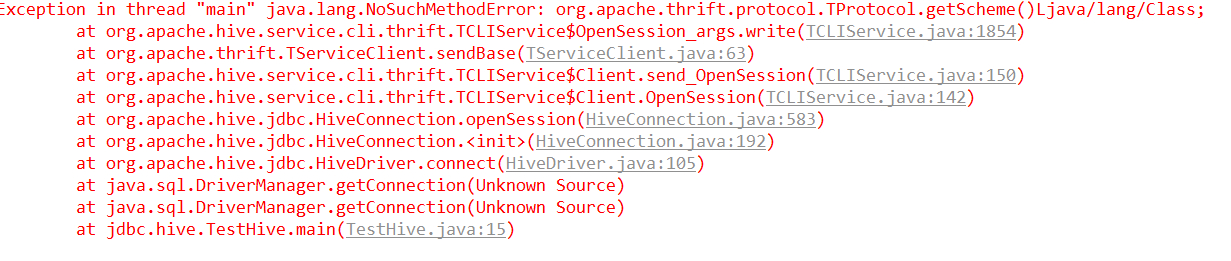

An error when connecting over Hive JDBC

After some digging, the cause turned out to be phoenix-4.6.0-HBase-1.1-client.jar

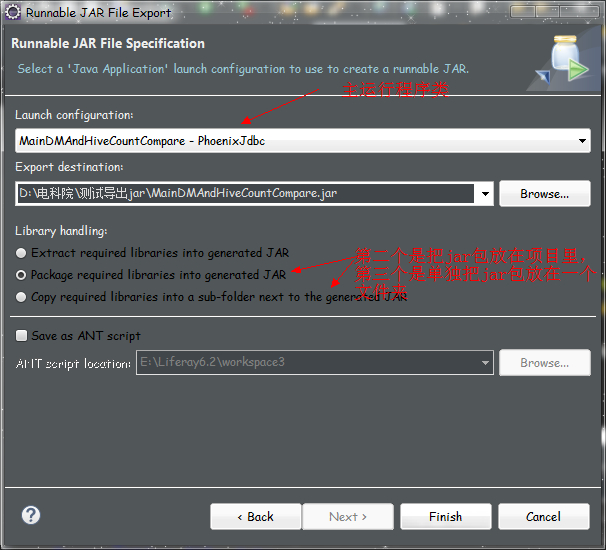

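Packaging with fat-jar

A jar built with fat-jar and run on the server may be missing dependent jars. The program then either fails, or runs without errors but returns no data. Use Eclipse's built-in jar export instead: right-click the project, choose Export, then select Runnable JAR file under Java.

When changing the project path, right-click the new path and choose Build Path > Use as Source Folder

The folder set as the source folder becomes the fixed root for package names. Take com.baidu.demo as an example:

if the com folder itself is marked as the source folder, the package of the .java files becomes baidu.demo

When uploading a Maven project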

-Dmaven.multiModuleProjectDirectory system property is not set. Check $M2_HOME environment variable and mvn script match.

1. Add the M2_HOME environment variable

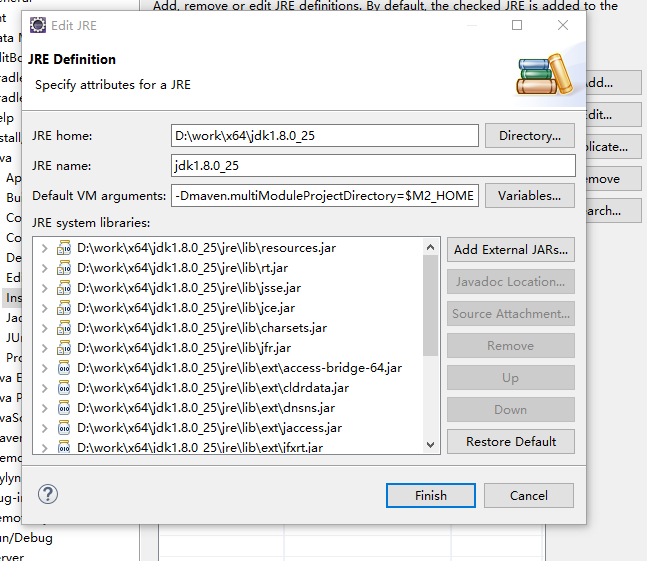

2. Go to Preferences -> Java -> Installed JREs, select a JDK, click Edit, and add the following to the default VM arguments:

-Dmaven.multiModuleProjectDirectory=$M2_HOME

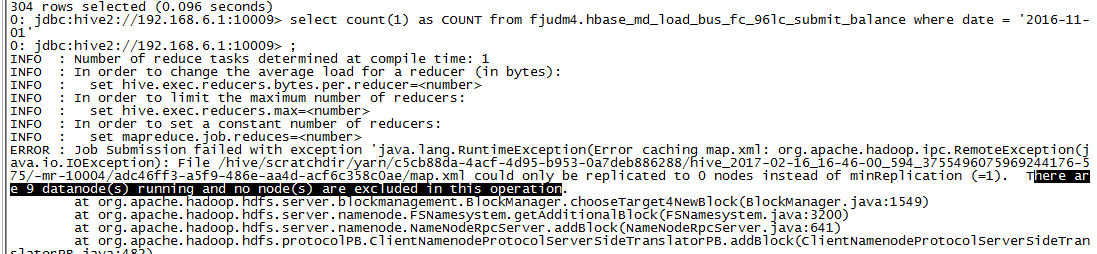

When running a Hive query: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

java.sql.SQLException: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

at org.apache.hive.jdbc.HiveStatement.execute(HiveStatement.java:296)

at org.apache.hive.jdbc.HiveStatement.executeQuery(HiveStatement.java:392)

at org.apache.hive.jdbc.HivePreparedStatement.executeQuery(HivePreparedStatement.java:109)

at com.sgcc.epri.dcloud.dm_hive_datacheck.query.HiveQuery.queryHiveLoadCount(HiveQuery.java:39)

at com.sgcc.epri.dcloud.dm_hive_datacheck.common.ReadExcelAndCompare.readLoad(ReadExcelAndCompare.java:90)

at com.sgcc.epri.dcloud.dm_hive_datacheck.main.MainLoad_Forecast.main(MainLoad_Forecast.java:14)

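The cause here was that HDFS had run out of space.

Hive aggregate query: the COUNT column cannot be found

Read the value by index with count = rs.getString(1); rather than by name with count = rs.getString("COUNT");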

select count(1) as COUNT from fjudm4.hbase_md_load_bus_fc_96lc_submit_balance where date = '2016-01-01'

java.sql.SQLException

at org.apache.hadoop.hive.jdbc.HiveBaseResultSet.findColumn(HiveBaseResultSet.java:81)

at org.apache.hadoop.hive.jdbc.HiveBaseResultSet.getString(HiveBaseResultSet.java:484)

at com.sgcc.epri.dcloud.dm_hive_datacheck.query.HiveQuery.queryHiveLoadCount(HiveQuery.java:47)

at com.sgcc.epri.dcloud.dm_hive_datacheck.common.ReadExcelAndCompare.readLoad(ReadExcelAndCompare.java:120)

at com.sgcc.epri.dcloud.dm_hive_datacheck.main.MainLoad_ForecastCount.main(MainLoad_ForecastCount.java:16)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.eclipse.jdt.internal.jarinjarloader.JarRsrcLoader.main(JarRsrcLoader.java:58)
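The index-based read can be wrapped in a small helper, sketched below. `HiveCountReader` and `readCount` are hypothetical names. The method expects a `ResultSet` from a query like the `select count(1)` above and does not depend on how the driver labels the aggregate column.

```java
import java.sql.ResultSet;
import java.sql.SQLException;

public class HiveCountReader {

    /**
     * Reads the single aggregate value by position instead of by label.
     * JDBC column indexes start at 1.
     */
    public static long readCount(ResultSet rs) throws SQLException {
        if (!rs.next()) {
            throw new SQLException("count query returned no rows");
        }
        // Same call as in the note above: getString(1), not getString("COUNT").
        return Long.parseLong(rs.getString(1));
    }
}
```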

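Configuring ojdbc in Maven

Because of Oracle's licensing (sob), this ojdbc driver cannot simply be pulled from the public Maven repository, so you have to download the matching ojdbc jar yourself. Open a command prompt in the folder that contains the jar (the Desktop in my case) and run this command to install it into the local repository: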

mvn install:install-file -DgroupId=com.Oracle -DartifactId=ojdbc14 -Dversion=10.2.0.2.0 -Dpackaging=jar -Dfile=C:\Users\Administrator\Desktop\ojdbc14-10.2.0.2.0.jar
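Once the jar is installed, the project's pom.xml can reference it with the same coordinates that were passed to the command. The fragment below is a sketch that mirrors the groupId, artifactId and version above:

```xml
<dependency>
    <groupId>com.Oracle</groupId>
    <artifactId>ojdbc14</artifactId>
    <version>10.2.0.2.0</version>
</dependency>
```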